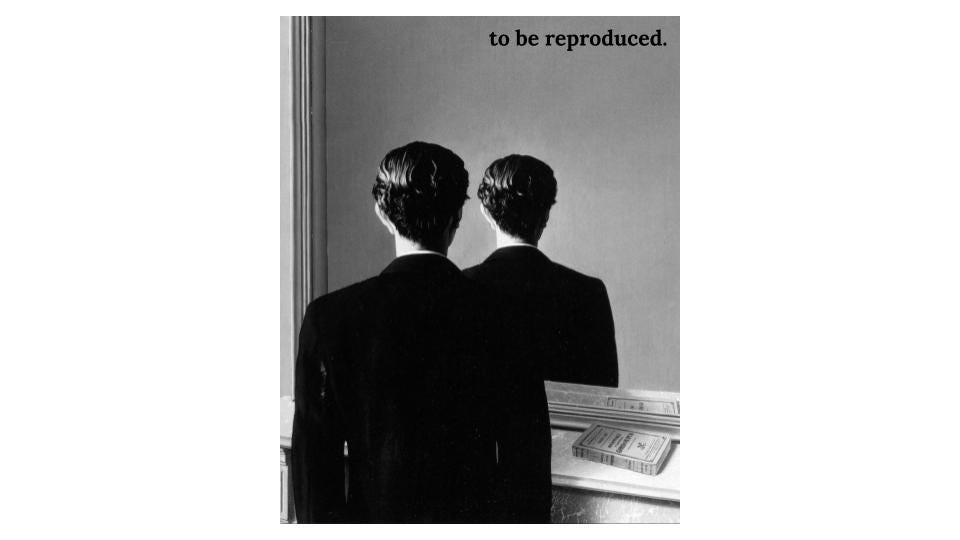

To Be Reproduced.

A transparent look into the origins of OpenAI. Part 1.

Mirror, mirror, on the wall.

Is this not the greatest startup of them all?

Around $100 billion of mirrors are sold each year.

The mirror making process begins with sand, which is refined into silica through purification.

Silica is transformed into glass by heating it to extreme temperatures to melt it into a liquid state.

When glass arrives at the mirror factory, it is placed on a conveyor belt where extensive cleaning takes place to remove all impurities.

The final steps consist of applying a reflective aluminum layer, applying a protective coating (usually copper and/or a layer of paint), then drying and cutting the mirror into a consumer-ready shape.

Mirror making in the Venetian era was a slow, dangerous, and time-consuming process that took anywhere from 3 to 6 weeks.

Mirror making today takes less than a day.

Very few people in our world consider mirrors “technology”, but to a 14th-century civilian, reflective glass was the most cutting-edge tech on the market. In some societies, a mirror was nothing short of magic, a futuristic medium that provided a clear window into human appearance.

To the 14th-century man, a mirror was what artificial intelligence is to the 21st-century man.

Elon Musk was hiding in the closet.

He was trying to get away from the noise coming from downstairs at a house party in Los Angeles. The Skype call he was on was much more important than any frivolous socializing that evening. Musk spent an hour cramped up in the closet trying to convince Demis Hassabis not to accept Google’s acquisition offer.

Unfortunately, Hassabis had already made up his mind.

In January 2014, Google acquired London-based AI lab DeepMind Technologies for between $400 million and $650 million.

Elon Musk was terrified.

You are probably wondering who Demis Hassabis is.

Demis is a co-founder of DeepMind. He is an academic obsessed with AI and the possibility of technology outpacing humans. He first met Elon Musk in 2012, and during their discussion, Demis argued that more advanced AI would surpass human intelligence and pose a threat to our existence. Even Musk’s plans for space colonization would not work because “superintelligence would simply follow humans into the galaxy.” After their conversation, Musk invested $5 million in DeepMind to keep up with Hassabis’ progress.

You are probably wondering why Musk was terrified after making millions from his investment when Google acquired DeepMind.

Elon believes that Google is irresponsibly chasing profits at the expense of human existence, and well, he also believes that Hassabis is a superintelligence supervillain.

DeepMind isn’t just unethical in his worldview, it is DeepEvil™.

“(Hassabis) literally made a video game where an evil genius tries to create AI to take over the world, and ( ) people don’t see it. And Larry? Larry thinks he controls Demis but he’s too busy ( ) windsurfing to realize that Demis is gathering all the power”

Elon Musk on DeepMind, summer of 2016

Elon’s paranoia about Demis is the entire reason OpenAI was formed.

Sam Altman was trying to break out.

The startup he founded at Y Combinator was losing steam and the window for a successful outcome seemed to be slowly closing.

Altman had dropped out of Stanford to work on Loopt full time, and raised money from top venture capital funds under the guidance of Paul Graham, Y Combinator’s founder.

Loopt was a social network that used location tracking to notify users when they were close to friends or to recommend restaurants. The startup failed to generate significant traction outside of fundraising and a few successful enterprise partnerships, but Sam was a mastermind when it came to leveraging media attention into bigger things. Fundraising was his superpower.

Earning trust from the people closest to him was his weakness. At two different points during Loopt’s lifetime, executives at the company requested that the board fire Altman for his compulsive dishonesty and selfish tendencies. Both times, the Loopt board sided with Altman, likely because of his ability to fundraise and generate media buzz.

Sam ended up bulldozing his way into a $43M acquisition. Loopt finally made it.

He personally netted $5M at the age of 26 and built a network of Silicon Valley power players in the process.

With the new money came new problems.

The lifestyle transformation was violent - Sam began to live more like a popstar than a technology entrepreneur.

His vices included Koenigsegg and ketamine, a potent duo.

Nonetheless, he maintained a generosity that often touched others. He was known to invest liberally into his friends’ ventures and extend hospitality to those in need.

He founded Hydrazine Capital, an investment fund, with his brothers Jack and Max.

Jack would go on to become a well-known investor in Silicon Valley circles, most recently raising a $275M solo venture capital fund “in a mere week”.

Altman’s mentors were PG and PT, Paul Graham and Peter Thiel, two of the most powerful people in the technology investing business.

In 2014, Paul Graham would pass the torch to Sam Altman, naming him president of Y Combinator.

Altman now had unparalleled access to some of the most promising startups in the world.

During this time, he invested in more than four hundred companies through his personal funds, Hydrazine, and Y Combinator.

It was during his time at YC that Altman began talking to Elon about building safer artificial intelligence.

In May 2015, Altman emailed Musk:

///

been thinking a lot about whether it’s possible to stop humanity from developing ai

i think the answer is almost definitely not

if it’s going to happen anyway, it seems like it would be good for someone other than google to do it first

///

Musk agreed that a conversation was worth it.

They met at the Rosewood Hotel on Sand Hill Road.

Elon was late.

A group of artificial intelligence leaders dined with Musk and Altman in a private room overlooking the pool pictured above.

The men there were Dario Amodei, Greg Brockman, and Ilya Sutskever.

All five agreed to join forces and build a non-profit AI company that could be better and safer than Google’s DeepMind.

By the end of the night, OpenAI was born.

The complexities of OpenAI’s business model can be broken down into a few key concepts.

Large language models (LLMs), infrastructure, and applications.

A large language model is the software foundation of AI: an artificial intelligence system trained on large datasets to process, understand, and generate human language.

The LLM-making process begins with data. A lot of it. Every book, article from the Internet, or post from Reddit represents a grain of sand that is refined into a vast training dataset. For context, GPT-4 was reportedly trained on 1,000 terabytes of text.

That’s 500 billion pages.

If you laid out 500 billion pages across New York City, it would cover the metro three times over.
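As a rough sanity check, the two figures quoted above imply about 2,000 bytes of text per page. The sketch below assumes decimal terabytes (10^12 bytes), which is an assumption and not a detail from the source; the variable names are illustrative only.

```python
# Back-of-the-envelope check: how much text per page do the quoted figures imply?
# Assumption: decimal terabytes (1 TB = 10**12 bytes).
BYTES_PER_TERABYTE = 10**12

dataset_bytes = 1_000 * BYTES_PER_TERABYTE  # 1,000 TB of text, as quoted
page_count = 500 * 10**9                     # 500 billion pages, as quoted

bytes_per_page = dataset_bytes // page_count
print(f"{bytes_per_page:,} bytes per page")
```

At roughly one byte per character of plain English text, that works out to a few hundred words per page, so the two numbers are at least consistent with each other.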

The vast dataset is first cleaned up and labeled to remove impurities.

Most LLMs are built on the “transformer” neural network architecture.

Data is poured into the transformer neural network and internal parameters known as “weights” are adjusted to reduce the error rate.
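To make "adjusting weights to reduce the error rate" concrete, here is a toy sketch with a single weight and plain gradient descent. It is not a transformer and not OpenAI’s training code; the data, learning rate, and step count are hypothetical values chosen so the example converges. A real LLM applies the same idea across billions of weights.

```python
# Toy illustration of adjusting a weight to reduce error.
# Hypothetical data: the hidden relationship is y = 3 * x.
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [3.0, 6.0, 9.0, 12.0]


def mean_squared_error(weight):
    """Average squared gap between predictions (weight * x) and targets."""
    return sum((weight * x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)


def gradient(weight):
    """Derivative of the mean squared error with respect to the weight."""
    return sum(2 * (weight * x - y) * x for x, y in zip(inputs, targets)) / len(inputs)


weight = 0.0            # start from an uninformed guess
learning_rate = 0.01    # hypothetical step size
initial_error = mean_squared_error(weight)

for _ in range(200):
    # Nudge the weight in the direction that lowers the error.
    weight -= learning_rate * gradient(weight)

final_error = mean_squared_error(weight)
print(f"learned weight: {weight:.3f}")
print(f"error went from {initial_error:.2f} to {final_error:.6f}")
```

Each pass through the loop is one small correction; training a large model is this loop repeated over enormous amounts of data, which is why compute becomes the bottleneck.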

The model is then tested and refined continuously by humans in a process called reinforcement learning from human feedback (RLHF).

Large language models are the knowledge base powering the AI economy, and everyone from startups to S&P 500 companies pays OpenAI for API access to run their own AI applications on top of models like GPT-5, GPT-4, DALL-E, and Whisper. LLMs are essentially OpenAI’s wholesale business. The API is priced based on usage, but we’ll cover more on this later.

The application layer is the user interface that everyday hustlers use to get their homework done. This is the free version of ChatGPT, the $20-per-month ChatGPT Plus, and other specialized tools.

Between LLMs and applications, OpenAI pulls in a reported $10B ARR ($830M+/month).
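The monthly figure follows directly from the annual one. A quick check, using only the reported $10B number from the text:

```python
# Convert the reported annual recurring revenue into a monthly run rate.
annual_recurring_revenue_usd = 10_000_000_000  # reported $10B ARR
monthly_revenue_usd = annual_recurring_revenue_usd / 12

print(f"${monthly_revenue_usd / 1e6:.0f}M per month")
```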

The infrastructure layer is the physical chips, supercomputers, and data centers that allow OpenAI to process such large amounts of data and power the neural networks to continually form new connections. Infrastructure is dominated by NVIDIA, and it’s the reason OpenAI needs to raise tens of billions of dollars every year for survival.

To paint a clear picture of how staggering infrastructure costs are, GPT-5’s development reportedly used at least 35,000 NVIDIA H100 GPUs, each priced at an average of $30,000. Conservatively, that’s $1B on chips.
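The chip math, spelled out. This uses only the GPU count and average price quoted above; it ignores networking, power, and cooling, so treat it as a floor and not a full cost estimate.

```python
# Rough chip bill from the quoted figures.
gpu_count = 35_000           # NVIDIA H100 GPUs, as quoted
price_per_gpu_usd = 30_000   # average price per GPU, as quoted

chip_cost_usd = gpu_count * price_per_gpu_usd
print(f"${chip_cost_usd / 1e9:.2f}B on chips")
```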

The training compute, meaning the raw processing power and electricity needed to train the model, conservatively cost $500M, given analyst estimates that it cost more than 10x what GPT-4 did.

Data centers are trickier to work into this analysis, but OpenAI and Microsoft both spend disrespectful amounts of money constructing larger and larger data centers in the hope of developing increasingly intelligent models that prove infinitely useful from an economic point of view. OpenAI’s “Stargate” data center project involves a reported total investment of $500B.

Artificial intelligence is the most expensive business in the world.

Elon knew they needed more money.

“We need to go with a much bigger number than $100M to avoid sounding hopeless relative to what Google or Facebook are spending. I think we should say that we are starting with a $1B funding commitment. This is real. I will cover whatever anyone else doesn’t provide.”

Elon Musk to OpenAI founders, November 2015

Musk did not cover the funding commitment.

Over the next year, Sutskever and Brockman started to realize just how much more money was needed. The largest bottleneck for OpenAI was compute, which was mostly contingent on the number of computer chips available. Their research indicated that scaling the number of chips was the fastest way to develop superintelligence in a reasonable timeframe. OpenAI needed as many chips as possible, specifically GPUs, or graphics processing units.

They needed billions, yesterday.

In the summer of 2017, Brockman and Sutskever began to talk with Altman and Musk about the necessity of forming a for-profit structure to bring in serious investors and scale the infrastructure necessary to even have a chance of competing with Google.

Altman was considering running for California governor at the time, and he made it clear that he would need to be the CEO if they were to become a for-profit company.

Musk was running Tesla and SpaceX, and he also made it clear that he would need to be the boss and have majority equity.

Sutskever and Brockman were torn between the two options.

In an effort to resolve the matter, Sutskever sent an email to the two potential CEOs:

///

Elon: We really want to work with you. We believe that if we join forces, our chance of success in the mission is the greatest. You are concerned that Demis could create an AGI dictatorship. So are we. So it is a bad idea to create a structure where you could become a dictator if you chose to.

Sam: When Greg (Brockman) and I are stuck, you’ve always had an answer that turned out to be deep and correct. We don’t understand why the CEO title is so important to you. Your stated reasons have changed, and it’s hard to really understand what’s driving it. Is AGI truly your primary motivation? How does it connect to your political goals? How has your thought process changed over time?

There’s enough baggage here that we think it’s very important for us to meet and talk it out. If all of us say the truth, and resolve the issues, the company that we’ll create will be much more likely to withstand the very strong forces it’ll experience.

///

Elon wasn’t going for any of that.

He responded in ten minutes.

“Guys, I’ve had enough. This is the final straw. I will no longer fund OpenAI until you have made a firm commitment to stay or I’m just being a fool who is essentially providing free funding to a startup. To be clear, this is not an ultimatum to accept what was discussed before. That is no longer on the table.”

-Elon

And that, ladies and gentlemen, is how Sam Altman emerged as the CEO of OpenAI.

OpenAI received only roughly $130M of its announced $1B fundraise, with a little more than $40M coming from Musk.

Altman needed to fundraise billions immediately, which was an increasingly uphill climb without Elon’s stamp of approval.

It took some time, but eventually OpenAI began to have serious discussions with Microsoft.

When it seemed everyone was on board for a $1B investment, Bill Gates wouldn’t sign off.

The projects OpenAI showed Microsoft execs didn’t move Gates one bit. An AI computer program that could outperform humans in a video game. A robotic hand that learned to solve a Rubik’s Cube.

What Gates wanted was an AI model that could help with research.

OpenAI’s GPT-2 wasn’t ready for a demo. So researchers at the company went all hands on deck for a month in order to please Bill Gates.

The team flew to Seattle in April of 2019 to give Gates a demo.

Three months later, Microsoft announced a $1B investment into OpenAI.

Dario Amodei was concerned about safety.

Altman wanted GPT-3 to be released on an accelerated timeline, but Amodei wanted to ensure that the company’s original mission of building safe intelligence was not compromised.

Their dissonance was the direct result of differing incentives.

Altman, the fundraiser, had made a number of promises to Microsoft about the technologies it would receive in exchange for its billion-dollar investment; therefore, he was strongly incentivized to compress timelines and ship products as quickly as possible.

Amodei was a deeply technical artificial intelligence researcher, who, unlike Altman, did not come from the dealmaking world. He dedicated his existence to exploring new frontiers in AI/ML, and his most recent research obsession was ensuring that safety was a priority, given the non-zero probability that AI could one day become as intelligent as humans.

Altman was worried about unlocking more money from Microsoft while Amodei was worried about artificial intelligence becoming the next nuclear bomb.

The rift widened as time went on. At the climax of their animosity in 2020, Amodei began to describe Altman’s behavior as “psychological abuse” and “gaslighting”.

Later that year, in a virtual video meeting, Dario Amodei addressed the OpenAI team and announced he was leaving to start a new LLM company.

He founded Anthropic, which is now one of OpenAI’s fiercest competitors.

Of the five original OpenAI founders who met at the Rosewood, only three remained.

Altman, Brockman, and Sutskever.

Appendix:

Not To Be Reproduced is a 1937 surrealist painting by Belgian artist René Magritte. The painting depicts Edward James, a patron of Magritte’s, standing before a mirror, but his reflection shows the back of his head instead of his face. The paradox emphasizes the notion that our true selves cannot be fully captured or reproduced in any form, be it a mirror reflection or a photograph.